Introduction

In part 2, “De-Mystifying Tungsten Cluster Topologies: CAA vs. MSAA,” we covered the difference between Composite Active/Active (CAA) and Multi-Site Active/Active (MSAA) topologies.

In this blog post we will highlight the key differences between Composite Active/Passive (CAP), Composite Active/Active (CAA) and Dynamic Active/Active (DAA) topologies.

The table below summarizes the key points of comparison:

| | Composite Active/Passive | Composite Active/Active | Dynamic Active/Active |
|---|---|---|---|
| Pros | Simplicity of application adoption and nearly fully automated continuous operations. | Fully automated continuous operations and the ability to write to multiple Primaries simultaneously. | Fully automated continuous operations and simplicity of application adoption, because the risks of write conflicts and failover flapping are not present. |
| Cons | By design, to prevent network-related failover flapping, a command is required to route writes to a different cluster. | The application must be active/active aware to reduce the risk of write conflicts. | Only one active Primary receives writes at a time. |

Composite Cluster Shared Characteristics

In all Composite clusters, there is a minimum of one cluster containing a writable Primary MySQL host and some number of Replicas. There are normally two or more clusters, which may span physical locations and/or cloud providers.

- Key concept: Managed cross-cluster replication

- One Tungsten Clustering installation per node (/opt/continuent)

- The Tungsten Replicator software package is not needed at all

- One set of commands only (/opt/continuent)

- Tungsten Managers see all clusters

- Connectors know about all clusters, both local and remote, and can route traffic anywhere

- The Tungsten Managers fully control the cross-cluster replication services

- Single software package to configure for start at boot

- Entire cluster can be easily managed with Tungsten Dashboard, a powerful web-based GUI

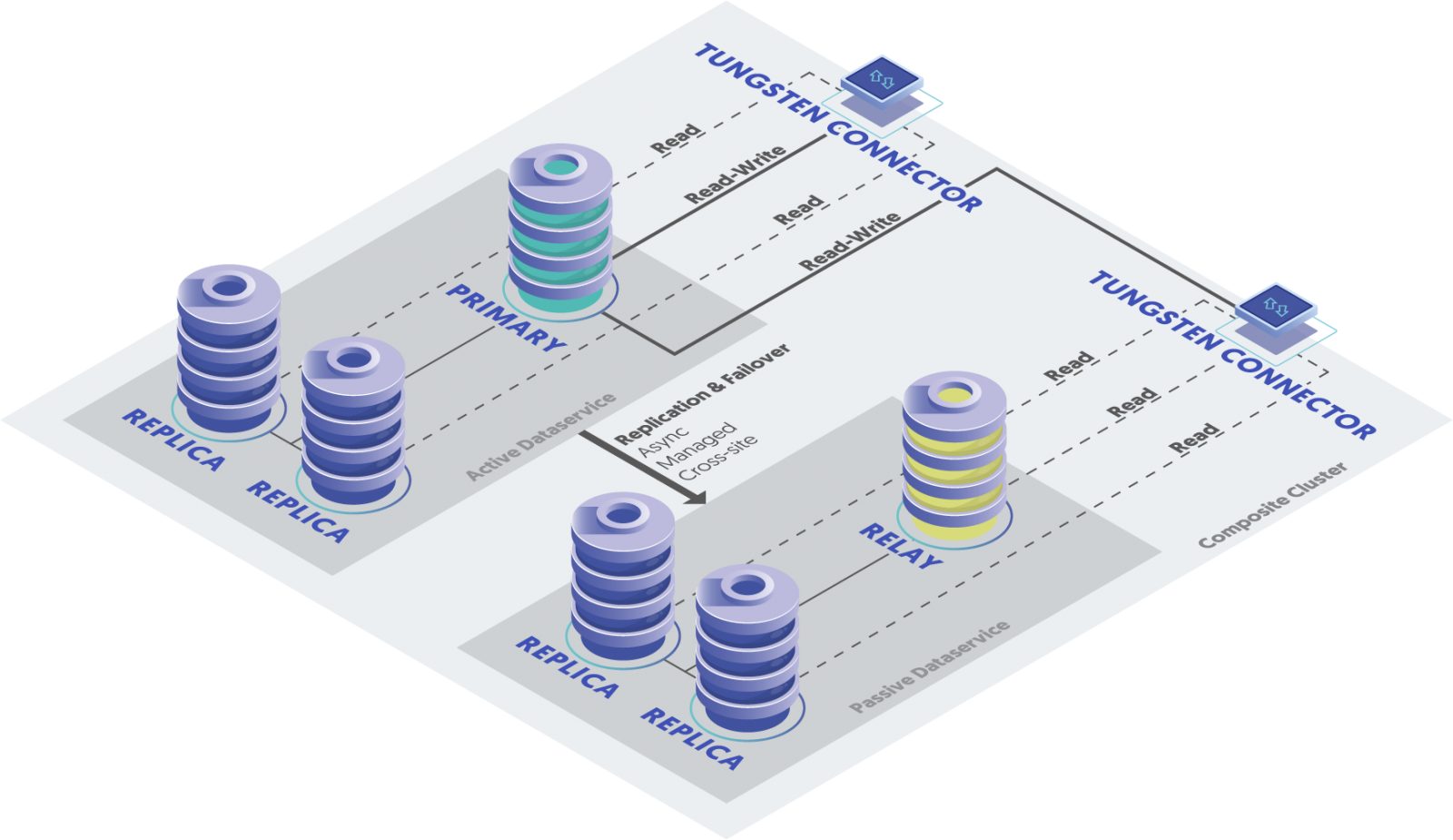

Composite Active/Passive Defined

- There is only one Active cluster at a time; Connectors route all writes to the same Primary node in the Active cluster, regardless of the originating cluster

- Connectors may be configured to read locally via read affinity settings, typically per-connector via the `user.map` configuration file

- The main advantage of this approach is that the number of possible conflicts is very low since a single MySQL server is responsible for all writes, regardless of the origin

- All Passive clusters have a special Relay node to efficiently pull the write events from the Active cluster to all local Replicas for replication into the MySQL servers

- In the event of a cluster-wide failure, human intervention is required to move the Active role to another cluster

  - Requires the `cctrl> failover` command

  - Designed to prevent failover flapping

    - This may be seen as an advantage or as a disadvantage, depending on the business’s use case (more on this later ;-)
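To make the CAP routing rules concrete, here is a toy Python model of how writes and reads flow. It is only a sketch under simplifying assumptions: the class and method names (`CapComposite`, `route_write`, `route_read`, `failover`) and the `<cluster>.primary` / `<cluster>.replica` labels are hypothetical, and the real routing decisions are made by the Tungsten Connector and Managers, not by code like this.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CapComposite:
    """Toy model of Composite Active/Passive routing (hypothetical, not Tungsten code)."""

    clusters: list[str]
    active: str
    down: set[str] = field(default_factory=set)

    def route_write(self, origin: str) -> str:
        # Every Connector sends writes to the Primary of the single Active
        # cluster, regardless of the cluster the client connected from.
        if self.active in self.down:
            # No automatic failover: writes fail until an operator acts.
            raise RuntimeError(f"Active cluster {self.active} is down; manual failover required")
        return f"{self.active}.primary"

    def route_read(self, origin: str) -> str:
        # With read affinity, reads can stay on a Replica in the local cluster.
        return f"{origin}.replica"

    def failover(self, new_active: str) -> None:
        # Stand-in for the operator running `cctrl> failover`.
        if new_active not in self.clusters or new_active in self.down:
            raise ValueError(f"{new_active} cannot become the Active cluster")
        self.active = new_active


if __name__ == "__main__":
    cap = CapComposite(clusters=["nyc", "london"], active="nyc")
    print(cap.route_write("london"))  # nyc.primary
    print(cap.route_read("london"))   # london.replica
    cap.down.add("nyc")               # cluster-wide failure of the Active cluster
    try:
        cap.route_write("london")
    except RuntimeError as err:
        print(err)
    cap.failover("london")            # human intervention
    print(cap.route_write("london"))  # london.primary
```

The point of the model is the gap between the failure and the `failover` call: nothing moves the Active role automatically, which is exactly what prevents flapping on a flaky network.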

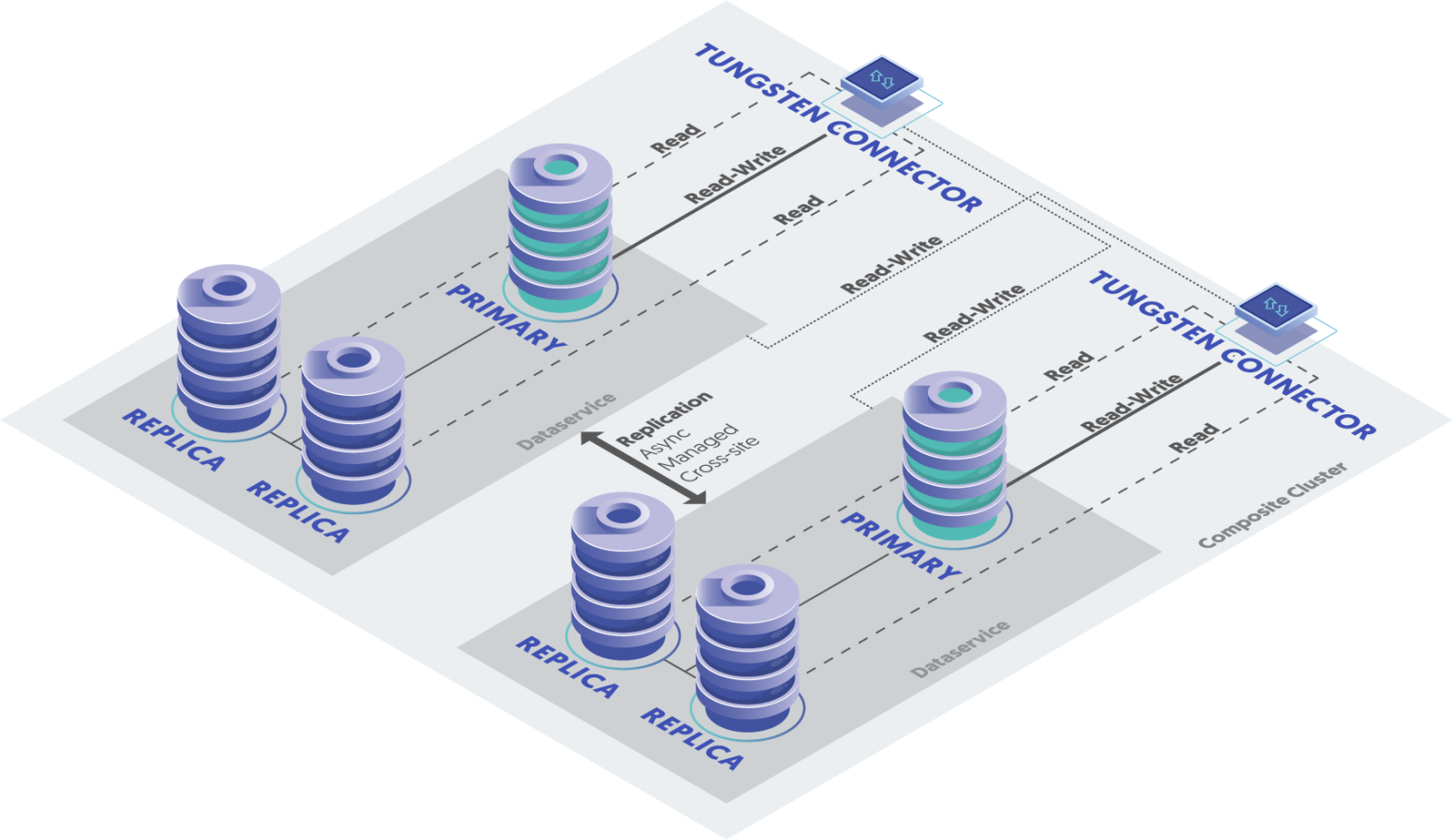

Composite Active/Active Defined

- All clusters are Active at the same time, so each cluster has a writable Primary and some number of Replicas

- Connectors normally route reads and writes to the local Primary, and optionally send reads to local Replicas

- In the event of a cluster-wide failure, the Connector will automatically shift writes to another cluster, and optionally shift writes back to the original cluster when it becomes available

- No human intervention is required to shift write operations to another cluster, providing truly automated Continuous Operations

- The Connector behavior is controllable via both read and write affinity settings

- Connectors may be configured with read and write affinity at the cluster level or per-connector

- Cross-cluster replication services are created automatically at install time

- Cross-cluster services may be controlled via `cctrl`

- Cross-cluster services are named “{LOCAL_SERVICE_NAME}_from_{REMOTE_SERVICE_NAME}”

- Example: `london_from_nyc`
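The CAA naming rule and the local-first write routing can be sketched in a few lines of Python. Assumptions, all labeled as such: there is one cross-cluster service per ordered (local, remote) pair of clusters; the order of the `clusters` list stands in for the configurable write affinity; and because routing is recomputed on every call, the model behaves as if the optional shift-back is enabled. The function names are hypothetical.

```python
from __future__ import annotations

from itertools import permutations


def cross_cluster_services(clusters: list[str]) -> list[str]:
    """Names following {LOCAL_SERVICE_NAME}_from_{REMOTE_SERVICE_NAME}.

    Assumes one cross-cluster service per ordered (local, remote) pair.
    """
    return [f"{local}_from_{remote}" for local, remote in permutations(clusters, 2)]


def caa_write_target(origin: str, clusters: list[str], down: set[str]) -> str:
    """Hypothetical CAA write routing: local Primary first, then fall back."""
    if origin not in down:
        # Normal case: write to the Primary of the client's own cluster.
        return f"{origin}.primary"
    # Local cluster failed: shift writes to the first available cluster.
    # The list order stands in for configurable write affinity.
    for name in clusters:
        if name not in down:
            return f"{name}.primary"
    raise RuntimeError("no cluster is available for writes")


if __name__ == "__main__":
    print(cross_cluster_services(["london", "nyc"]))
    # ['london_from_nyc', 'nyc_from_london']
    sites = ["london", "nyc"]
    print(caa_write_target("london", sites, down=set()))       # london.primary
    print(caa_write_target("london", sites, down={"london"}))  # nyc.primary
    print(caa_write_target("london", sites, down=set()))       # london.primary again
```

Note that with N clusters this pairing rule yields N × (N − 1) cross-cluster services, so a three-cluster CAA deployment would have six.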

Composite Active/Active versus Composite Active/Passive

The key differences between CAP and CAA are:

- CAP has a single writable Primary in a single Active cluster, while all clusters in CAA are writable

- In CAP, all writes go to the same Primary node in the single Active cluster, whilst CAA writes go to the local Primary by default (if it is available), and to another available cluster otherwise

- If the Active cluster fails in CAP, human intervention is required to activate a different cluster as Active; with CAA, the Connector automatically routes writes to another available cluster based upon configurable rules

- CAA has a higher risk of data integrity issues, due to the merged asynchronous writes from multiple source clusters

- CAP allows for simplicity of application adoption because the risk for write conflicts inherent in CAA is not present
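Why do merged asynchronous writes raise the data integrity risk? Here is a deliberately simplified Python illustration; the function names are hypothetical, and real replication applies row events in ways this does not model. Two clusters update the same row at about the same time, each commits its own change first and then applies the other's replicated change, so the two clusters end up holding different values.

```python
from __future__ import annotations


def apply_events(initial: dict[str, int], events: list[tuple[str, int]]) -> dict[str, int]:
    """Apply row updates in order; the last update applied wins (simplified)."""
    row = dict(initial)
    for column, value in events:
        row[column] = value
    return row


def caa_conflict_demo() -> tuple[dict[str, int], dict[str, int]]:
    initial = {"balance": 100}
    # Both clusters accept an update to the same row at about the same time.
    london_write = ("balance", 150)
    nyc_write = ("balance", 80)
    # Each cluster commits its local write first, then applies the remote
    # write when it arrives through asynchronous cross-cluster replication.
    london = apply_events(initial, [london_write, nyc_write])
    nyc = apply_events(initial, [nyc_write, london_write])
    return london, nyc


if __name__ == "__main__":
    london, nyc = caa_conflict_demo()
    print(london)  # {'balance': 80}
    print(nyc)     # {'balance': 150}
```

With CAP, both updates would reach one Primary and be applied in a single order, so every cluster would replicate the same final value.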

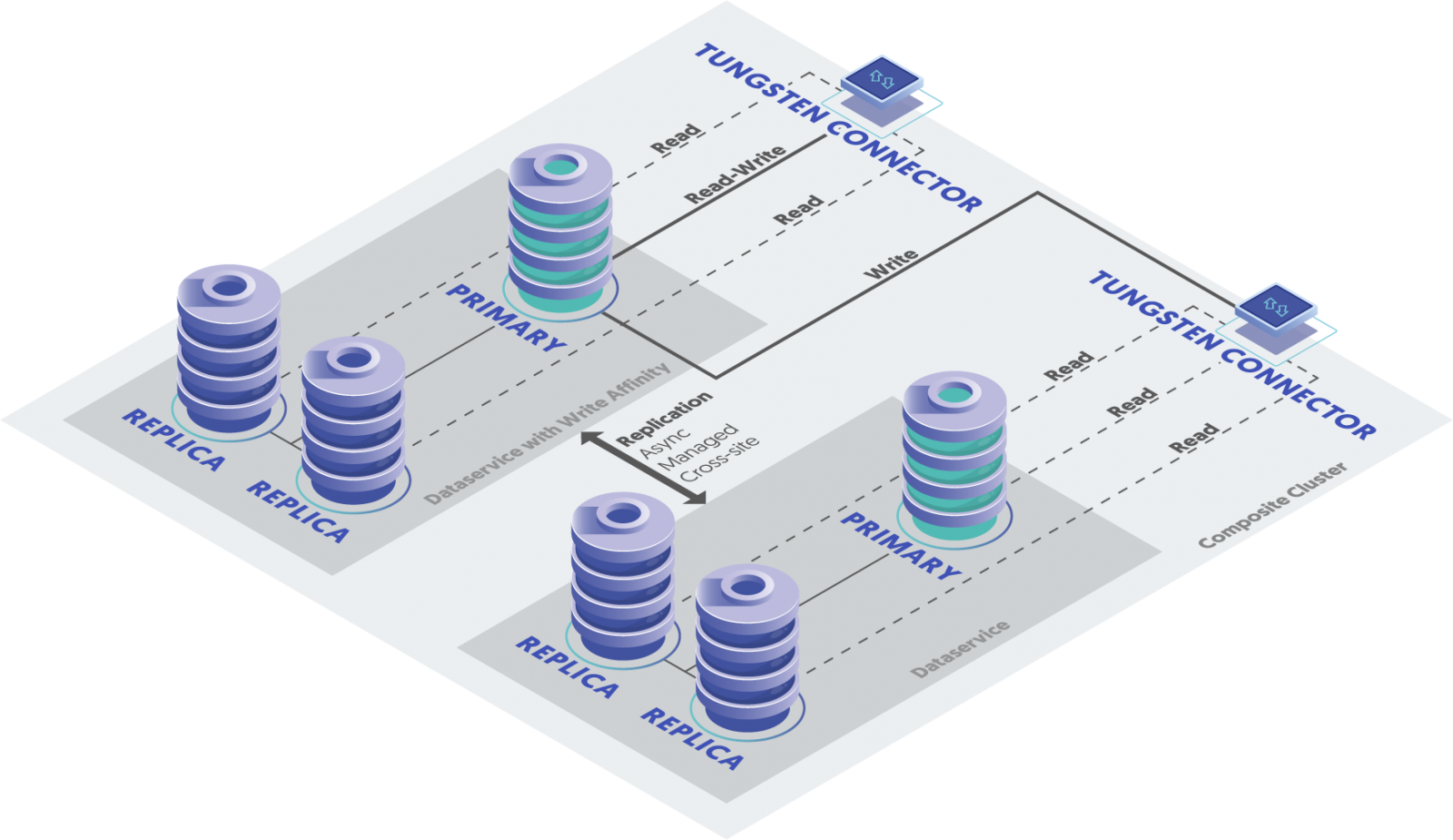

Dynamic Active/Active Defined

- All features from CAA apply

- DAA gives the simplicity of deployment and data integrity of CAP, with the automated Continuous Operations of CAA

- All clusters are Active at the same time, so each cluster has a writable Primary and some number of Replicas

- Connectors normally route writes (and reads) to a single Primary in a preferred cluster, and optionally send reads to local Replicas, just like CAP behavior

- In the event of a cluster-wide failure, the Connector will automatically shift writes to another cluster, and optionally shift writes back to the original cluster when it becomes available, just like CAA behavior

- No human intervention is required to shift write operations to another cluster, providing truly automated Continuous Operations

- The major benefit is that this mimics the simplicity and data integrity of the CAP topology, since all writes are sent to a single Primary MySQL server even though all clusters are available for writes

- To deploy, set up CAA and specify two cluster-wide `tpm` configuration options:

  - `connector-write-affinity=yourClusterA,yourClusterB`

  - `connector-reset-when-affinity-back=true`

- From the Tungsten documentation:

“The benefit of a Composite Dynamic Active/Active cluster is being able to direct writes to only one cluster, which avoids all the inherent risks of a true Active/Active deployment, such as conflicts when the same row is altered in both clusters. This is especially useful for deployments that do not have the ability to avoid potential conflicts programmatically.”
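The effect of the two `tpm` options can be pictured with a small Python model. Assumptions, all hypothetical: `DaaConnector` and `route_write` are illustrative names; `clusterA`/`clusterB` stand in for `yourClusterA`/`yourClusterB`; and the behavior with the reset flag off (staying on the fallback cluster) is our reading of the option name, not documented Connector internals.

```python
from __future__ import annotations


class DaaConnector:
    """Toy model of DAA write routing (hypothetical class, not Tungsten code).

    write_affinity mirrors connector-write-affinity (most preferred first);
    reset_when_affinity_back mirrors connector-reset-when-affinity-back.
    """

    def __init__(self, write_affinity: list[str], reset_when_affinity_back: bool) -> None:
        self.write_affinity = write_affinity
        self.reset_when_affinity_back = reset_when_affinity_back
        self.current: str | None = None

    def route_write(self, down: set[str]) -> str:
        available = [c for c in self.write_affinity if c not in down]
        if not available:
            raise RuntimeError("no cluster is available for writes")
        if self.reset_when_affinity_back or self.current not in available:
            # Move to the most preferred available cluster: either the
            # current one failed, or we always return to the preferred one.
            self.current = available[0]
        # All writes through this Connector go to this one Primary; with the
        # same affinity list everywhere, every site writes to the same cluster.
        return f"{self.current}.primary"


if __name__ == "__main__":
    conn = DaaConnector(["clusterA", "clusterB"], reset_when_affinity_back=True)
    print(conn.route_write(down=set()))         # clusterA.primary
    print(conn.route_write(down={"clusterA"}))  # clusterB.primary (automatic)
    print(conn.route_write(down=set()))         # clusterA.primary (shifted back)

    sticky = DaaConnector(["clusterA", "clusterB"], reset_when_affinity_back=False)
    sticky.route_write(down={"clusterA"})
    print(sticky.route_write(down=set()))       # clusterB.primary (stays put)
```

Compared with the CAA sketch, the only real change is that the write target no longer depends on where the client connects, which is what gives DAA its CAP-like single-writer behavior.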

Composite Active/Passive versus Dynamic Active/Active

“DAA gives the simplicity of deployment and data integrity of CAP, with the automated continuous operations of CAA”

- DAA Connectors automatically reroute writes to another available cluster if the current Active cluster fails, while CAP requires human intervention to move the Active role to another cluster

Composite Active/Active versus Dynamic Active/Active

“DAA gives the simplicity of deployment and data integrity of CAP, with the automated continuous operations of CAA”

- CAA clusters normally have writes going to the local Primary, while in DAA, all writes are routed to a single Primary, mimicking CAP behavior

- DAA allows for simplicity of application adoption, lowering the risk of write conflicts

Wrap-Up

In this blog post, we explored the key differences between Composite Active/Passive (CAP), Composite Active/Active (CAA) and Dynamic Active/Active (DAA) topologies, and we learned that DAA offers the best compromise between Continuous Operations, Data Integrity and simplicity of application adoption.

If you’re a Continuent customer, reach out to Zendesk Support to learn more. Otherwise, reach out to the Continuent Team to start a conversation about your MySQL clustering needs.
